Generically Dockerizing Linux VMs

Alternatively: How I Dockerized My Old Linode VPS With Almost No Changes

Jan 21st, 2021 · 12 min read

It's 2012. You have this amazing new service to launch! So of course, you start getting it to run within a docker container and evaluate tools like Kubernetes to manage deploys of it. Oh wait...

Technically, containers did exist in 2012, but Docker didn't yet. Tools like Puppet and Chef existed too. However, you make the relatively sane decision to get things up and running quickly on a manually managed VPS. Arguably, that's not a terrible decision even today for some things. You treat it like the snowflake it is, setting everything up on it and creating scripts on the fly right on the VPS.

It works! It runs for years with minimal to no problems. You even get some customers and make a little profit.

Years pass. Customers start to leave. You slack on those package updates...

It's now 2020. All your customers are gone. You've moved on to other things. You have not updated the packages in years. Somehow it's all still running and seemingly not hacked. Every time you think about decommissioning it, you get sad at the idea of never being able to turn it on again. You think you maybe could get it running locally. Then you realize it's using PHP 5, MySQL 5, and you barely remember how it all works. The last thing you want to do is port some old software for nostalgia's sake.

You happen to have chosen Linode way back and wonder if there's a way to run the VM image locally. After some research, it's looking pretty dubious, but maybe possible?

What if you could run the image in a docker container?! Also, what if you could dockerize it generically without really understanding how the configuration on the VM works? You could quickly spin it up and toy with it to your heart's content. Once you're done, you shut it down, and it's back to the same state it was before.

You do some naive searches on the topic. It's a combination of dubious approaches and people telling you you don't understand Docker.

You ignore them. No one can stop you. You want to quickly spin up some nostalgia via a simple docker run command. You're too lazy and don't have enough time to re-figure out how to get it running otherwise. You also want to finally decommission that VPS so you can host this blog and other projects on it...

Downloading Your VM

We'll cover this from the perspective of Linode, but everything is more or less relevant to any VPS provider. Most of this also applies if you are trying to dockerize a local VM.

Regardless of the approach you take, you'll want to reboot your Linode into recovery mode first. This will ensure you get a pristine copy of your VM without having to worry about files changing.

Once you've rebooted, launch the web console. From there we can set a root password, mount the filesystem and start an SSH server so we can transfer data out.

passwd

mount /dev/sda /media

service ssh start

Now, open up a terminal locally and kick off a transfer of your VPS's root filesystem.

sudo rsync -a --numeric-ids root@linode-server-host-or-ip:/media linode-vm

By using rsync, we can simply re-run the command to pick up where we left off if we lose our connection.
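If your connection is flaky, you can let the shell do the re-running for you. The sketch below uses a hypothetical helper, retry_until_success, and a RETRY_DELAY variable that are my own assumptions, not part of the original setup. It works because rsync skips files whose size and modification time already match, so each retry resumes roughly where the previous one stopped. Note it retries on any failure, including ones that will never fix themselves (like a wrong password), so keep an eye on it.

```shell
# Hypothetical helper: re-run a command until it exits successfully,
# sleeping RETRY_DELAY seconds (default 10) between attempts.
retry_until_success() {
  local delay=${RETRY_DELAY:-10}
  local attempt=1
  until "$@"; do
    echo "attempt $attempt failed; retrying in ${delay}s" >&2
    attempt=$((attempt + 1))
    sleep "$delay"
  done
}

# Usage (not run here). --partial is my addition, not in the original command;
# it keeps partially transferred files so a large file can resume too.
#   retry_until_success sudo rsync -a --numeric-ids --partial \
#     root@linode-server-host-or-ip:/media linode-vm
```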

Why sudo and numeric-ids? On Linux, only root can create files that belong to a different user. This means we are forced to use sudo in order to preserve file ownership. --numeric-ids is used because the users and groups on your VM likely do not match what you have locally; without it, rsync maps ownership by user and group name. We also want to ensure that file ownership, once we dockerize it, corresponds to the id mappings we had in our original VM.
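To spot-check that ownership survived the copy, you can count files by numeric owner. A healthy copy of a root filesystem should be dominated by 0:0 (root), with a handful of service accounts such as your database user; if nearly everything is owned by your own uid, ownership was lost. The helper name owner_summary is hypothetical, and it assumes GNU find, whose -printf supports %U and %G for numeric user and group IDs.

```shell
# Hypothetical helper: count files under a directory by numeric uid:gid,
# most common owner first. Requires GNU find for -printf.
owner_summary() {
  find "$1" -printf '%U:%G\n' | sort | uniq -c | sort -rn
}

# Usage (not run here); sudo is needed because parts of a root filesystem
# are readable only by root:
#   sudo find linode-vm/media -printf '%U:%G\n' | sort | uniq -c | sort -rn | head
```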

Can't we do this other ways?

You sure can! However, there are always pros and cons. If you have enough storage available locally, I think the above is the simplest approach you can take, especially if you have even the slightest worry that you could lose your connection during the transfer. If your VPS provider offers a way to simply download an image of your VM, that may also be better (Linode does not). Some other approaches to consider, though:

Create a tarball server-side

You can avoid issues with file ownership by tarring everything up server-side and then transferring the tarball. This may actually be ideal if you have enough space available on your VPS to do so, since it is less error-prone.
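A minimal sketch of the tarball approach, assuming GNU tar is available in the recovery environment. The helper name make_rootfs_tarball and the destination path in the usage comment are placeholders of my own. A side benefit is that docker import accepts a (gzipped) tarball file directly, so an archive that is never extracted locally needs no local sudo at all.

```shell
# Hypothetical helper: pack a mounted root filesystem into a gzipped tarball.
# --numeric-owner stores raw uid/gid numbers, so ownership does not depend on
# which user and group names exist on the machine that later reads it.
make_rootfs_tarball() {
  local src=$1 dest=$2
  # -C makes archive paths relative to the filesystem root (./etc, ./var, ...).
  tar -C "$src" --numeric-owner -czf "$dest" .
}

# Usage (not run here). Server-side, writing somewhere with enough free space
# (placeholder path):
#   make_rootfs_tarball /media /path/with/free/space/linode-vm.tar.gz
# Or stream the same archive straight to your machine and import it as-is:
#   ssh root@linode-server-host-or-ip "tar -C /media --numeric-owner -czf - ." > linode-vm.tar.gz
#   docker import linode-vm.tar.gz dockerized-linode-vm
```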

Transfer the disk as an image

Instead of mounting /dev/sda, we could do something like this from our local machine instead to transfer the entire image:

ssh root@linode-server-host-or-ip "dd if=/dev/sda bs=4M" | dd of=/somewhereLocally/linode-vm.img bs=4M

Pros:

- We get a true archive of our disk we can directly mount.

- No need to worry about messing up file ownership.

Cons:

- No way to pick up where we left off if the transfer fails.

- If your disk is 100GB, but there is only 10GB of data on it, the image is still going to be 100GB.

  - You could pipe dd into gzip, but you'll have to decompress it in order to mount it anyway.

  - You could shrink the disk, zero out empty space, and/or use conv=sparse on dd. However, all these approaches involve jumping through hoops.

- You'll have to mount the image locally in order to create the docker image regardless.

  - docker import does not magically let you import ext4 disk images directly.

  - Some tools exist for working with ext4 images without mounting them, but they will likely be more trouble than they are worth.
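If you do go the image route, the gzip and conv=sparse ideas can be combined: compress on the VPS, decompress on the fly locally, and let dd skip writing all-zero blocks so the local file is sparse. This is a sketch under a few assumptions: the helper name write_sparse_image is hypothetical, it needs GNU dd, and your local filesystem must support sparse files. Only blocks that are actually zero get skipped; leftover data from deleted files is not zero, which is why zeroing out free space first helps.

```shell
# Hypothetical helper: read a gzip stream on stdin and write it out as a
# sparse disk image. Requires GNU dd (iflag=fullblock, conv=sparse, status=none).
write_sparse_image() {
  # iflag=fullblock collects full 4 MiB blocks from the pipe so runs of zeros
  # line up into whole blocks; conv=sparse seeks over all-zero blocks instead
  # of writing them, leaving holes in the output file.
  gunzip -c | dd of="$1" bs=4M iflag=fullblock conv=sparse status=none
}

# Usage (not run here):
#   ssh root@linode-server-host-or-ip "dd if=/dev/sda bs=4M | gzip -1" \
#     | write_sparse_image linode-vm.img
```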

SquashFS for those short on space

If you're short on space, you could combine any of the techniques above with SquashFS. This could let you do things like transfer a raw image via dd into a new compressed SquashFS image and then mount it without decompressing it. Apart from complexity, there may be other pitfalls with this approach.

This AskUbuntu answer may help if you decide to go this route. I have not fully verified it, but at a glance it looks right.

Dockerize it?

cd linode-vm/media

sudo tar -c . | docker import - dockerized-linode-vm

Sudo again?! Just like before, we must use sudo in order for file ownership to be preserved. However, you will not need sudo ever again after this. If you are skeptical, the docker import documentation tells you to do exactly this.

Otherwise, easy right? Not so fast...

docker run --name dockerized-linode-vm --rm -i -t dockerized-linode-vm bash

You will get an interactive shell, but none of your services will be running. Also, systemd doesn't really work:

systemctl list-unit-files --type=target

Failed to get D-Bus connection: Failed to connect to socket /run/systemd/private: No such file or directory

At this point though, you could run binaries and scripts directly, and they may work for your purposes. However, it'd be much more useful if it ran more like it originally did.

Patch it so it boots like it did as a VM

We're going to assume your VM was using systemd. In my case, it was a rather old version of systemd that was missing newer things I expected. If your VM was not using systemd, the general idea of what we're doing here will be the same, though your mileage may vary.

In the previous section, we created a dockerized-linode-vm image. We'll build upon it to patch the filesystem so it is bootable within a container:

FROM dockerized-linode-vm

ENV container=docker

STOPSIGNAL SIGRTMIN+3

COPY ./patch-filesystem.sh ./

RUN bash ./patch-filesystem.sh

ENTRYPOINT [ "/sbin/init" ]

Let's run through everything line-by-line:

ENV container=docker

This line identifies to systemd that it is running within a docker container. This may be important if you want to run your container without --privileged (especially important if you plan to deploy it in production). Unfortunately, in my case, the version of systemd I was using was too old and did not seem to have any of the new systemd patches that allow it to run in containers without --privileged.

STOPSIGNAL SIGRTMIN+3

By default, docker will send a SIGTERM to pid 1 to shut it down. The signal used can be overridden by STOPSIGNAL though.

We must override the stop signal because systemd does not exit on SIGTERM. Instead, it defines the shutdown signal as SIGRTMIN+3.

ENTRYPOINT [ "/sbin/init" ]

Normally, when the VM boots, the kernel executes /sbin/init first, and it coordinates the boot process of your machine. On systemd-based distributions, /sbin/init is usually just a symlink to systemd itself.

However, simply running /sbin/init is not enough.

Firstly, it must run as PID 1. PID 1 is special in several ways:

- If PID 1 dies, all other processes in the container will be killed.

- By default, SIGTERM and SIGINT will not kill PID 1; the kernel only delivers them if PID 1 has installed a handler. Instead, it is up to PID 1 to listen for these signals and handle them appropriately. In this case, it would orchestrate the shutdown of all the services it spawned in the container, then exit.

- Orphaned processes are re-parented to PID 1, so any zombie processes within the container must be reaped by PID 1.

TLDR: pid 1 is typically reserved for the init service, or in docker land, some service that explicitly handles SIGTERM and SIGINT.

To ensure /sbin/init runs as PID 1, we must use the exec form (the JSON array syntax) of ENTRYPOINT. Otherwise, /sbin/init would be executed as a subcommand of /bin/sh -c, and thus would not be PID 1.
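In our container systemd plays the PID 1 role, so you don't need anything like this here. But for contrast with "some service that explicitly handles SIGTERM and SIGINT", here is a minimal sketch of what that handling looks like in a shell entrypoint. The helper run_supervised and the service in the usage comment are hypothetical; it forwards both signals to its child as SIGTERM and waits for the child to exit. It is not a full init replacement.

```shell
# Hypothetical helper: run a service as a child of this shell, forward
# SIGTERM/SIGINT to it (as SIGTERM), and return the last status wait reported.
run_supervised() {
  "$@" &
  local child=$!
  # Double quotes expand $child now, so the handler targets this exact child.
  trap "kill -TERM $child 2>/dev/null" TERM INT
  wait "$child"
  local status=$?
  # A trapped signal makes wait return early (status > 128) while the child
  # may still be shutting down, so keep waiting while it is still around.
  while kill -0 "$child" 2>/dev/null; do
    wait "$child"
    status=$?
  done
  trap - TERM INT
  return "$status"
}

# Example entrypoint line (hypothetical service and flag):
#   run_supervised /usr/sbin/my-service --foreground
```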

patch-filesystem.sh

This script is used to remove services we do not want to run within our dockerized VM. Since there is already a host system, we don't want to start up any services pertaining to things like networking or filesystems.

#!/bin/bash
# Stop at the first failing command.
set -e
# Enable !(pattern) globs, used by the last rm command.
shopt -s extglob

# The host handles mounts, so the VM's fstab must not be applied.
cat /dev/null > /etc/fstab

# Of the early-boot (sysinit) units, keep only tmpfiles setup.
(
  cd /lib/systemd/system/sysinit.target.wants/
  for i in *; do
    [ "$i" = systemd-tmpfiles-setup.service ] || rm -f "$i"
  done
)

# Drop distribution-provided units we don't want started in a container.
rm -f /lib/systemd/system/multi-user.target.wants/*
rm -f /lib/systemd/system/local-fs.target.wants/*
rm -f /lib/systemd/system/sockets.target.wants/*udev*
rm -f /lib/systemd/system/sockets.target.wants/*initctl*
rm -f /lib/systemd/system/basic.target.wants/*

# Drop locally enabled units too, except the services listed below.
(
  cd /etc/systemd/system
  rm -f getty.target.wants/* local-fs.target.wants/* sysinit.target.wants/*
  cd multi-user.target.wants
  rm -f !(httpd.service|mysqld.service|nagios.service|postfix.service)
)

The script above is relatively generic and will remove pretty much everything you would not want running in the container while keeping everything else. The exception to this is its last rm command:

rm -f !(httpd.service|mysqld.service|nagios.service|postfix.service)

Here I declared several services I explicitly wanted to keep. You can modify this line as needed to keep what you want.
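Before building, it can help to preview what that last line would delete from your extracted copy. The sketch below is a hypothetical dry-run helper, preview_removals, that only prints names and removes nothing. One wrinkle it accounts for: entries in *.wants directories are usually symlinks with absolute targets that only exist inside the VM, so on your host they look dangling.

```shell
# Hypothetical dry-run helper: list entries in a *.wants directory that are
# NOT in the keep list given as the remaining arguments. Nothing is removed.
preview_removals() {
  local dir=$1 unit name keep k
  shift
  for unit in "$dir"/*; do
    # Accept dangling symlinks too; skip the literal pattern of an empty glob.
    [ -e "$unit" ] || [ -L "$unit" ] || continue
    name=${unit##*/}
    keep=no
    for k in "$@"; do
      [ "$name" = "$k" ] && keep=yes
    done
    [ "$keep" = yes ] || echo "$name"
  done
}

# Usage (not run here):
#   preview_removals linode-vm/media/etc/systemd/system/multi-user.target.wants \
#     httpd.service mysqld.service nagios.service postfix.service
```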

Build & Start Your Patched Container

docker build -t patched-dockerized-linode-vm .You can then run it like so, modifying your exposed ports as needed:

docker run -d \

--privileged \

-p 8080:80 \

--tmpfs /tmp \

--tmpfs /run \

-v /sys/fs/cgroup:/sys/fs/cgroup:ro \

--name patched-dockerized-linode-vm \

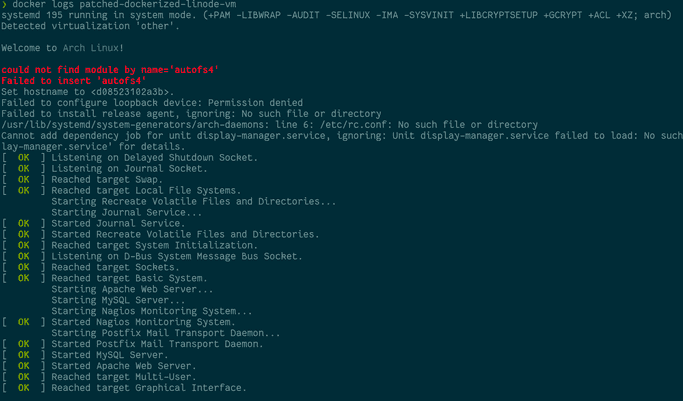

-t patched-dockerized-linode-vmNow, if you look at the logs, you'll see it has successfully booted:

docker logs patched-dockerized-linode-vm

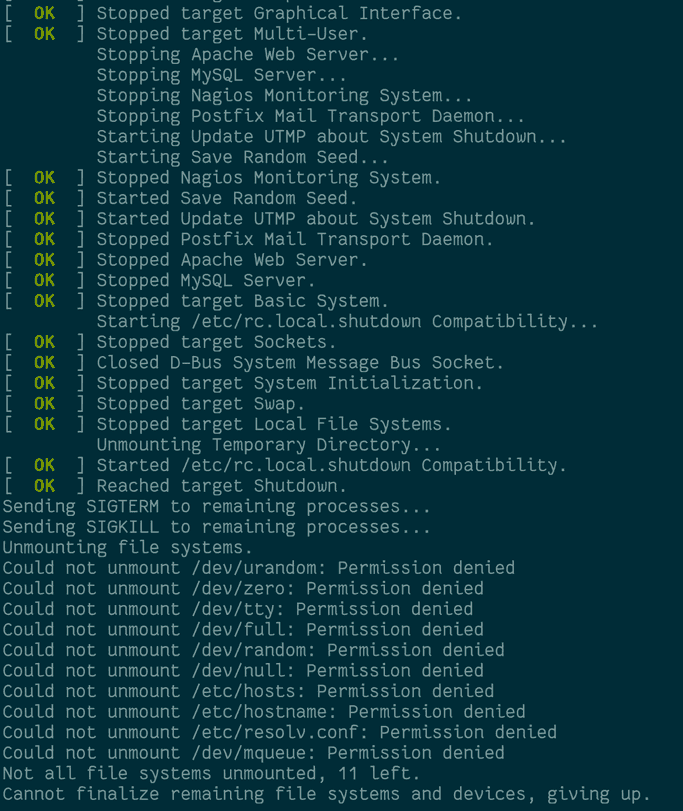

If you stop the container, it'll also shut down gracefully:

docker stop patched-dockerized-linode-vm

docker logs patched-dockerized-linode-vm

Why don't I see any of my boot up logs?

You may not see the boot output as shown above. In fact, I didn't. I believe this is because my version of systemd does not understand the container=docker environment variable.

When the system boots, it attempts to attach console output to /dev/tty1. /dev/tty1 typically represents the console output you see on your monitor.

By default, docker does not attach a tty. However, you can ask docker to add a virtual tty by adding -t to your docker run command. You usually use this when you want to open an interactive console on your docker container. It turns out, you can use it with -d. After adding -t, you should now see your boot up and shutdown logs when you call docker logs.

On newer versions of systemd, you should hopefully not have to use -t as long as you set the container=docker environment variable.

Do I need --privileged?

If the version of systemd on your VM is new enough, you should not need it with the above instructions. I would try without it first.
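One way to make trying both variants painless is a small wrapper around the run command above that only adds --privileged when asked. This is a sketch: docker_run_vm, PRIVILEGED, and DOCKER are hypothetical names of my own. Setting DOCKER=echo prints the command instead of running it, which is handy for checking what would be executed.

```shell
# Hypothetical wrapper around the docker run command above.
# PRIVILEGED=1 adds --privileged; DOCKER defaults to "docker" (left unquoted
# on purpose so values like "sudo docker" also work).
docker_run_vm() {
  local image=${1:-patched-dockerized-linode-vm}
  set -- run -d
  if [ "${PRIVILEGED:-0}" = 1 ]; then
    set -- "$@" --privileged
  fi
  set -- "$@" -p 8080:80 --tmpfs /tmp --tmpfs /run \
    -v /sys/fs/cgroup:/sys/fs/cgroup:ro \
    --name "$image" -t "$image"
  ${DOCKER:-docker} "$@"
}

# Usage (not run here): try unprivileged first, and only if systemd fails to
# boot (check docker logs), remove the container and retry:
#   docker_run_vm
#   docker rm -f patched-dockerized-linode-vm && PRIVILEGED=1 docker_run_vm
```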

So, should you actually do this?

Well, maybe. Especially if the version of systemd is new enough and you avoid the use of --privileged, this could be a great way to temporarily move an old service into something more flexible. Also, using systemd in general as an init system for a brand new container is not a terrible idea either. If you do have to use --privileged though, you should exercise more caution. Especially so if the VM you dockerized is using rather old packages.

References

- https://developers.redhat.com/blog/2016/09/13/running-systemd-in-a-non-privileged-container/

  - This was one of the most in-depth technical resources I found on this topic. It's also a great resource for understanding how you can use systemd with any container in general.

- https://serverfault.com/a/825027

  - The inspiration for patch-filesystem.sh was taken from here. However, I made a few modifications. It may be a helpful reference if my script doesn't do it for you.